Planar Data Classification

I have been working through a deep learning course on Coursera from Andrew Ng's DeepLearning.ai.

One of the projects in the third week is on planar data classification, where you develop a neural network from scratch. It was pretty in-depth: during the course I went over each little piece of math needed to build the network, including back propagation, which Andrew Ng calls some of the most complicated derivative calculus.

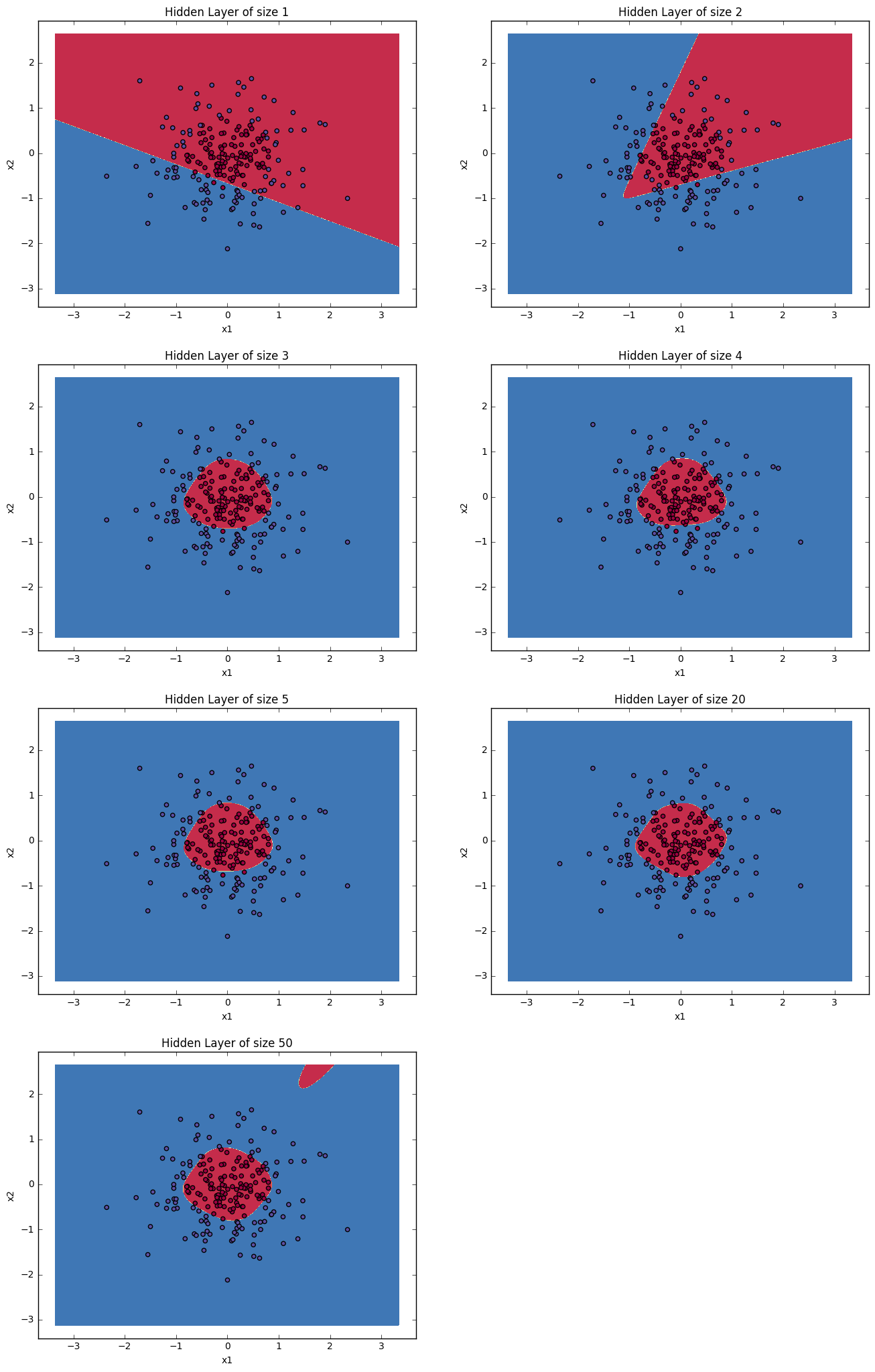

We tested the model on various datasets using a range of hidden layer sizes: 1, 2, 3, 4, 5, 20, and 50 hidden units. The results varied with the number of hidden units used. It is interesting to see the magic of these sorts of algorithms as they warp themselves around the data.
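To make the idea of sweeping over hidden layer sizes concrete, here is a minimal NumPy sketch. It is not the course notebook code. It assumes a single hidden layer with tanh activation, a sigmoid output, and plain gradient descent, and the helper names (`make_rings`, `train`, `predict`, `accuracy`), the stand-in ring dataset, and the learning rate and step count are all hypothetical choices made for illustration.

```python
import numpy as np

def make_rings(n=200, noise=0.1, seed=0):
    """Hypothetical stand-in dataset: inner ring is class 0, outer ring is class 1."""
    rng = np.random.default_rng(seed)
    y = rng.integers(0, 2, size=n)
    radius = np.where(y == 1, 1.0, 0.4) + noise * rng.standard_normal(n)
    angle = rng.uniform(0, 2 * np.pi, size=n)
    X = np.vstack([radius * np.cos(angle), radius * np.sin(angle)])  # shape (2, n)
    return X, y.reshape(1, n)

def train(X, Y, n_h, steps=2000, lr=1.2, seed=1):
    """Fit a 2 -> n_h -> 1 network (tanh hidden, sigmoid output) by gradient descent."""
    rng = np.random.default_rng(seed)
    n_x, m = X.shape
    W1 = rng.standard_normal((n_h, n_x)) * 0.01
    b1 = np.zeros((n_h, 1))
    W2 = rng.standard_normal((1, n_h)) * 0.01
    b2 = np.zeros((1, 1))
    for _ in range(steps):
        # Forward pass
        A1 = np.tanh(W1 @ X + b1)
        A2 = 1 / (1 + np.exp(-(W2 @ A1 + b2)))
        # Backward pass for the cross-entropy cost
        dZ2 = A2 - Y
        dW2 = dZ2 @ A1.T / m
        db2 = dZ2.sum(axis=1, keepdims=True) / m
        dZ1 = (W2.T @ dZ2) * (1 - A1 ** 2)  # derivative of tanh is 1 - tanh^2
        dW1 = dZ1 @ X.T / m
        db1 = dZ1.sum(axis=1, keepdims=True) / m
        # Gradient descent update
        W1 -= lr * dW1
        b1 -= lr * db1
        W2 -= lr * dW2
        b2 -= lr * db2
    return W1, b1, W2, b2

def predict(params, X):
    """Return 0/1 predictions of shape (1, m) by thresholding the output at 0.5."""
    W1, b1, W2, b2 = params
    A1 = np.tanh(W1 @ X + b1)
    A2 = 1 / (1 + np.exp(-(W2 @ A1 + b2)))
    return (A2 > 0.5).astype(int)

def accuracy(params, X, Y):
    """Percentage of training points classified correctly."""
    return float((predict(params, X) == Y).mean() * 100)

X, Y = make_rings()
for n_h in [1, 2, 3, 4, 5, 20, 50]:
    params = train(X, Y, n_h)
    print(f"Accuracy for {n_h} hidden units: {accuracy(params, X, Y):.1f} %")
```

The numbers this prints depend on the stand-in data and the random seed, so they will not match the accuracies reported in this post.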

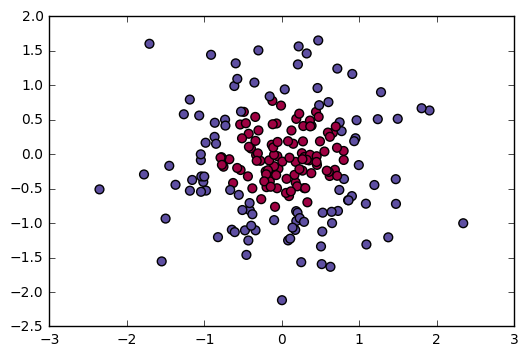

After finishing the graded portion of the project we were given some suggested datasets to play with. I will show you the results from that test below if you are curious. This is the "noisy_circles" dataset.
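The dataset names used in this post match generators in scikit-learn, so a rough way to produce similar data is sketched below. This is an assumption on my part rather than the course's exact setup: the sample count, noise levels, seed, and the helper names `load_extra_datasets` and `to_network_layout` are illustrative, and folding the blob labels into two classes with `% 2` is one possible way to make that dataset binary.

```python
from sklearn.datasets import (
    make_blobs,
    make_circles,
    make_gaussian_quantiles,
    make_moons,
)

def load_extra_datasets(n_samples=200, seed=3):
    """Return a dict of (X, y) pairs; X has shape (n_samples, 2), y has shape (n_samples,)."""
    return {
        "noisy_circles": make_circles(
            n_samples=n_samples, factor=0.5, noise=0.3, random_state=seed
        ),
        "noisy_moons": make_moons(n_samples=n_samples, noise=0.2, random_state=seed),
        "blobs": make_blobs(
            n_samples=n_samples, n_features=2, centers=6, random_state=seed
        ),
        "gaussian_quantiles": make_gaussian_quantiles(
            n_samples=n_samples, n_features=2, n_classes=2, random_state=seed
        ),
    }

def to_network_layout(X, y):
    """Put one example per column and fold labels into two classes (assumed convention)."""
    return X.T, (y % 2).reshape(1, -1)

datasets = load_extra_datasets()
X, Y = to_network_layout(*datasets["noisy_circles"])
print(X.shape, Y.shape)
```

Putting one example per column is only a layout choice that suits matrix-style forward and backward passes; a row-per-example layout works just as well if the network code is written for it.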

Here are the accuracy results and plots.

Accuracy for 1 hidden units: 59.5 %

Accuracy for 2 hidden units: 73.0 %

Accuracy for 3 hidden units: 79.0 %

Accuracy for 4 hidden units: 75.5 %

Accuracy for 5 hidden units: 79.0 %

Accuracy for 20 hidden units: 78.0 %

Accuracy for 50 hidden units: 78.0 %

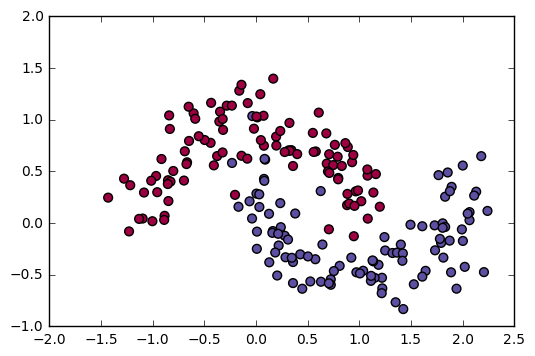

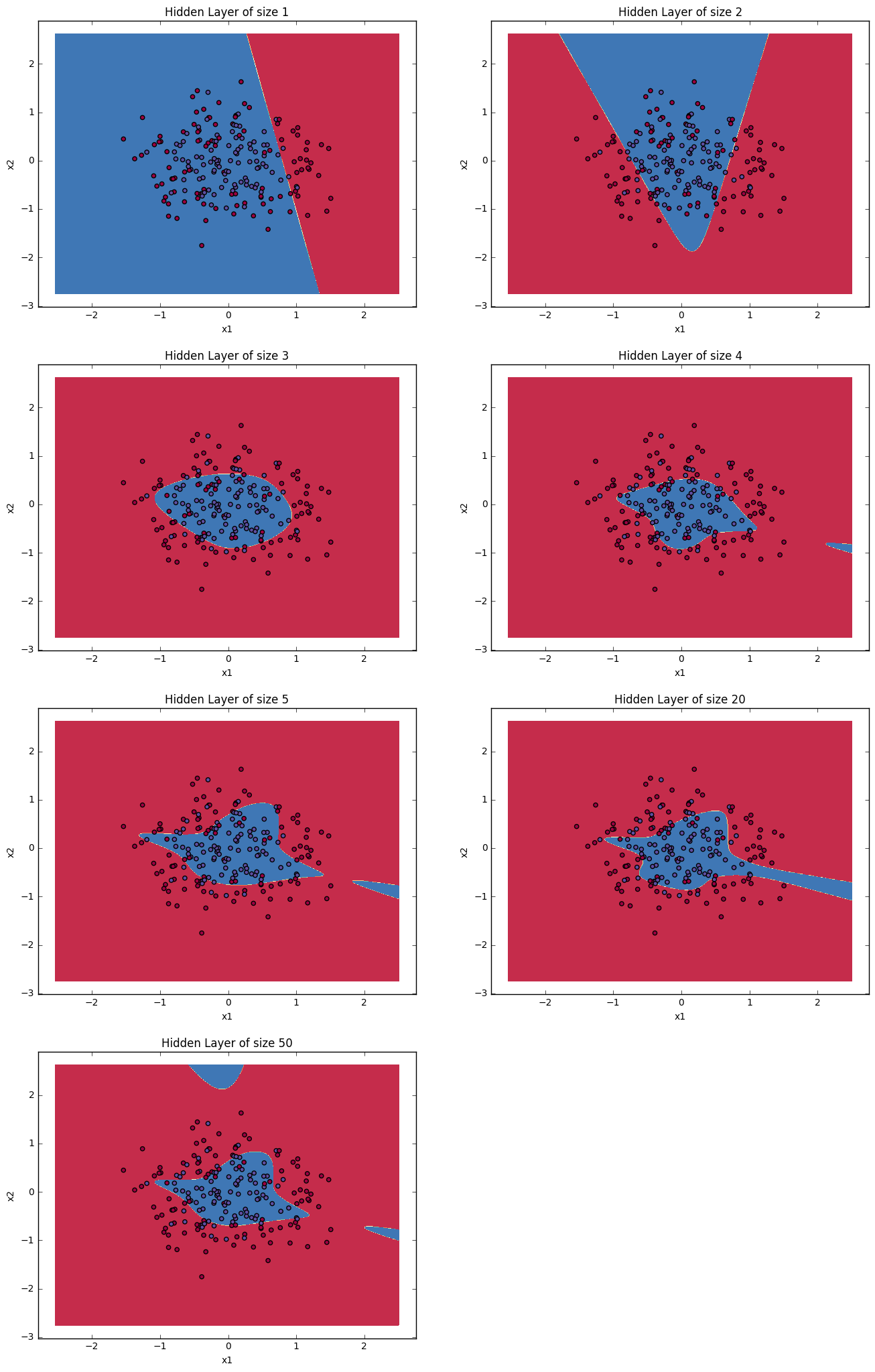

The next was the "noisy_moons" dataset.

The results are below.

Accuracy for 1 hidden units: 87.5 %

Accuracy for 2 hidden units: 87.0 %

Accuracy for 3 hidden units: 97.0 %

Accuracy for 4 hidden units: 98.5 %

Accuracy for 5 hidden units: 87.0 %

Accuracy for 20 hidden units: 98.5 %

Accuracy for 50 hidden units: 89.5 %

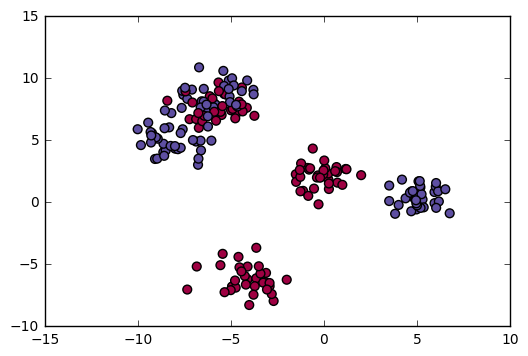

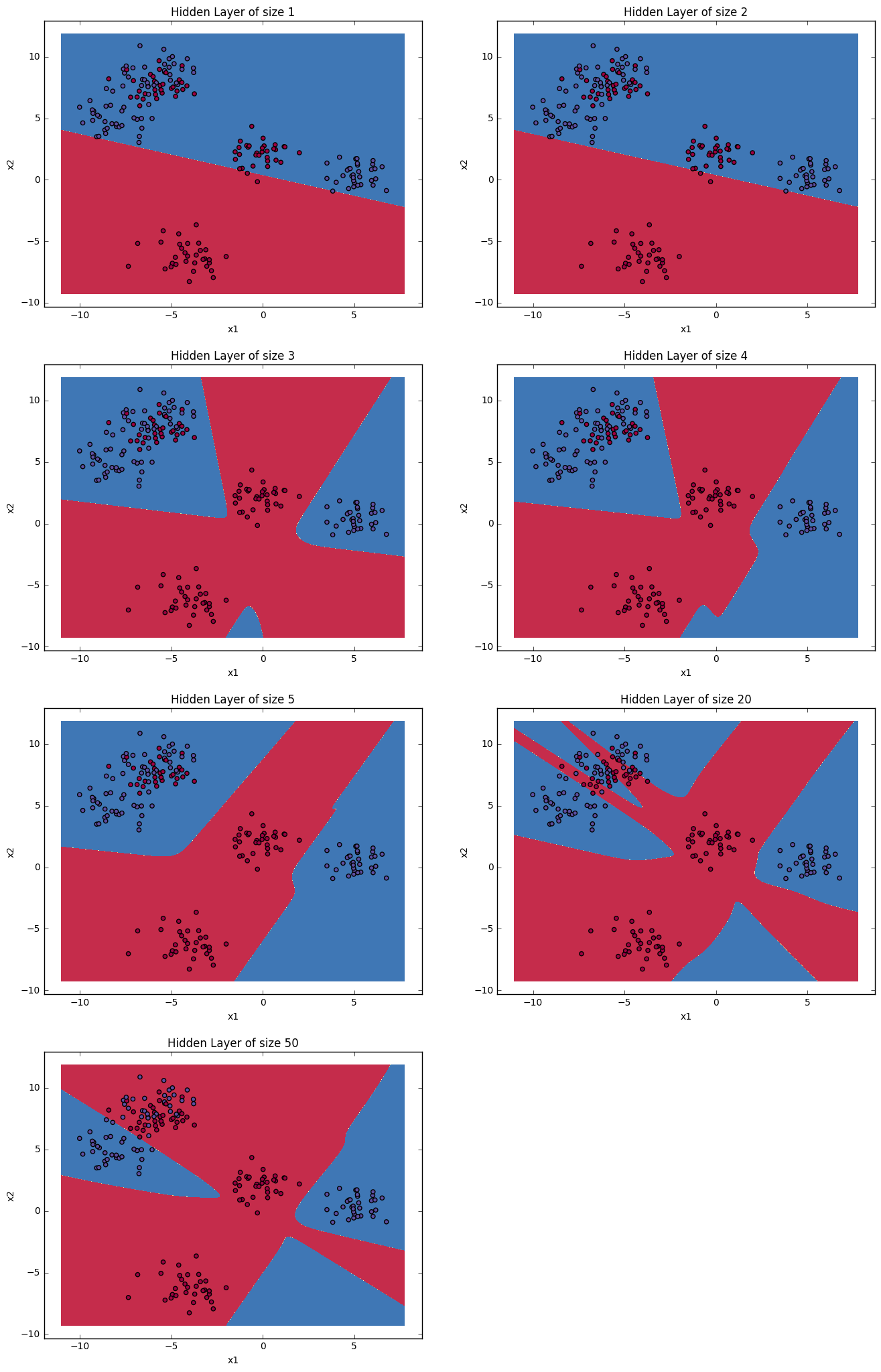

The next dataset was "blobs".

The results are below.

Accuracy for 1 hidden units: 67.0 %

Accuracy for 2 hidden units: 67.0 %

Accuracy for 3 hidden units: 83.0 %

Accuracy for 4 hidden units: 83.0 %

Accuracy for 5 hidden units: 83.0 %

Accuracy for 20 hidden units: 88.0 %

Accuracy for 50 hidden units: 83.5 %

The last dataset was "gaussian_quantiles".

The results are below.

Accuracy for 1 hidden units: 71.0 %

Accuracy for 2 hidden units: 84.0 %

Accuracy for 3 hidden units: 98.0 %

Accuracy for 4 hidden units: 97.5 %

Accuracy for 5 hidden units: 98.0 %

Accuracy for 20 hidden units: 100.0 %

Accuracy for 50 hidden units: 100.0 %

I am not going to go into as much detail as I did in my previous post, because I got a lot of feedback from people telling me it was too long. So I will keep this short and sweet.

Anyway, with a nice cost function, a few derivatives, and A LOT of matrix math, we have a neural network that is useful.
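For reference, here is a sketch of what that cost function and those derivatives look like. It assumes a network with one tanh hidden layer and a sigmoid output trained with the cross-entropy cost. The symbols ($m$ examples as columns of $X$, labels $Y$, activations $A^{[1]}$ and $A^{[2]}$, weights $W^{[l]}$ and biases $b^{[l]}$) are notation chosen here for illustration.

```latex
\begin{aligned}
J &= -\frac{1}{m} \sum_{i=1}^{m} \Big[ y^{(i)} \log a^{[2](i)} + \big(1 - y^{(i)}\big) \log\big(1 - a^{[2](i)}\big) \Big] \\
dZ^{[2]} &= A^{[2]} - Y \\
dW^{[2]} &= \frac{1}{m}\, dZ^{[2]} A^{[1]\,T} \\
db^{[2]} &= \frac{1}{m} \sum_{i=1}^{m} dZ^{[2](i)} \\
dZ^{[1]} &= W^{[2]\,T} dZ^{[2]} \odot \big(1 - A^{[1]} \odot A^{[1]}\big) \\
dW^{[1]} &= \frac{1}{m}\, dZ^{[1]} X^{T} \\
db^{[1]} &= \frac{1}{m} \sum_{i=1}^{m} dZ^{[1](i)}
\end{aligned}
```

Here $\odot$ is the element-wise product, and the factor $1 - A^{[1]} \odot A^{[1]}$ is the derivative of tanh evaluated at the hidden layer's pre-activations.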


Basically, I learned a lot from this exercise. I learned the key components that go into developing a network and how the network behaves on varying datasets, and I learned it rather quickly. The material was clear and concise, and I have really been enjoying this course. All the pieces involved in deep learning feel more tightly wrapped up in my mind after this course, and it was a lot of fun to go back over some things I had learned previously in my Udacity course, but from a different perspective.